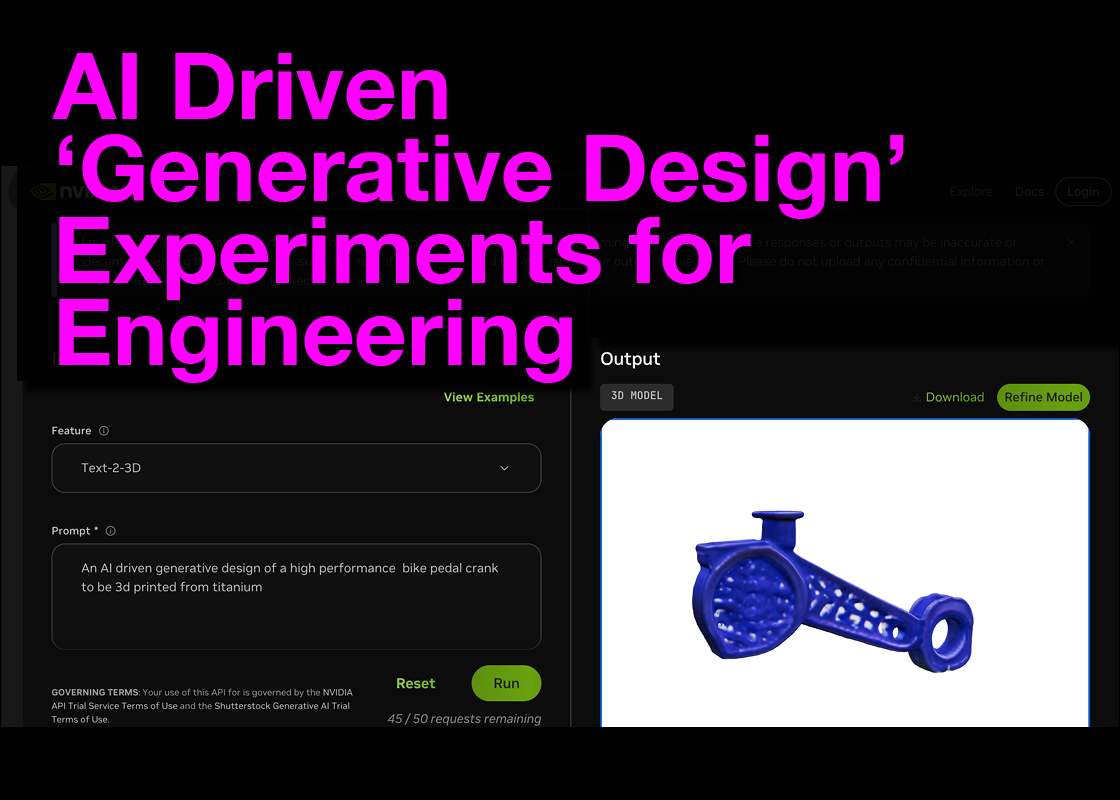

AI-Driven 'Generative Design' Experiments for Engineering

March 2024 edition

As we continue down the path toward ‘AI-Assisted Engineering’, which seems an inevitable direction, it is worth taking stock of where we currently are and considering what actions will pave the way forward, so that the road is not full of unseen potholes or wildly off course.

If we consider the future of design, the furthest our collective imaginations seem to be taking us is to 'Generative AI for Engineering,' whereby a well-defined prompt or requirement given via natural language will result in a fully engineered physical artifact. This artifact will meet all performance and manufacturing requirements for the full lifecycle of the product, from cradle to grave.

While I bow down to our supreme AI overlords in pursuit of this future, we are not there yet.

NVIDIA is clearly at the forefront of making this a reality. It supplies both the hardware infrastructure needed to train and run these systems and, through the Omniverse Platform, the software tools and frameworks that let companies build 3D applications on top of them.

Together with Shutterstock, they have assembled a proof-of-concept API called Edify-3D. While not aimed at engineering, it offers a glimpse of where the technology stands (as of March 27, 2024) and illustrates how far out on the horizon our current conception of Generative AI for Engineering remains.

Let’s start with a typical mechanical engineer’s first thought when handed cutting-edge computational design and advanced manufacturing: an over-engineered bicycle component to reduce the weight of their bike and impress their other engineer-type friends.

Using the prompt ‘An AI driven generative design of a high performance bicycle pedal crank to be 3D printed from Titanium’, we get this result.

And yes, fair point: asking for it to be ‘AI Driven’ is kinda like saying ‘ATM Machine’. But it is also often what we mean when we discuss ‘generative design’. We expect something ‘futuristic and/or organic’, and what we get is something that ‘looks right’ (to a certain degree) but not something that performs.

So let’s try ‘A high performance bicycle pedal crank with a length of 175 mm to be used by a 90kg rider on smooth asphalt roads to be 3D printed from Titanium’.

While this is far from a complete specification of requirements, it refers a little less to the AI process and aesthetic and is a little more descriptive, which gives us this result.

NVIDIA’s Edify-3D proof of concept has never claimed to be developed for generative engineering; it synthesizes geometry based on how something looks, not how it performs.

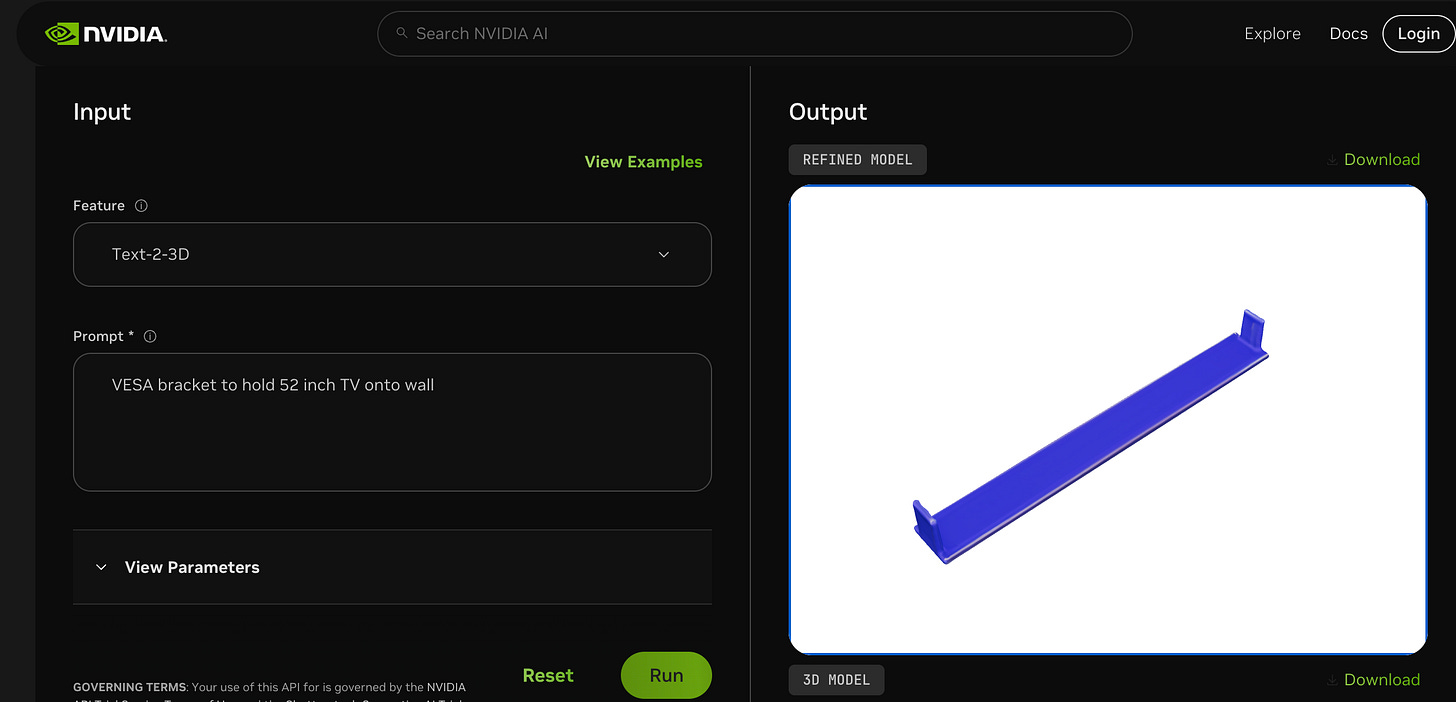

So we see that when we define something by performance requirements, we are nowhere close to getting a performant part. Even well-known and well-documented examples like a VESA bracket fail to produce anything resembling functional geometry.

Researchers from multiple institutions, including MIT, Harvard and the University of Washington, went way deeper into exploring similar approaches in How Can Large Language Models Help Humans in Design And Manufacturing? (published July 25, 2023), and a paper by MIT and DTU explored the same territory in From Concept to Manufacturing: Evaluating Vision-Language Models for Engineering Design. Both push against the boundaries of what is currently possible with these models and highlight some of the challenges these systems face in solving engineering problems.

NAVASTO have been developing AI-Assisted Engineering software for close to a decade. Their software uses surrogate models to accelerate design cycles by reducing the need for computationally heavy simulation, and they have gained considerable traction in the automotive industry, also using NVIDIA technology and proving out applications with General Motors, Volkswagen, Audi and more.

These processes require performance data, not just how something looks.
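To make the surrogate-model idea concrete, here is a minimal sketch in Python. It is not NAVASTO's method or any vendor's API: `expensive_simulation` is a hypothetical analytic stand-in for a costly solver run, and inverse-distance weighting (IDW) is used only because it fits in a few lines. A small budget of "simulations" produces performance data, the cheap surrogate screens thousands of candidate designs, and only the most promising one goes back to the expensive model.

```python
import math
import random
from typing import Callable, Sequence, Tuple

Point = Tuple[float, ...]


def expensive_simulation(x: Point) -> float:
    """Hypothetical stand-in for a costly solver run (e.g. FEA or CFD).

    Returns a made-up performance metric to minimise for two design variables.
    """
    a, b = x
    return (a - 0.3) ** 2 + (b - 0.7) ** 2 + 0.1 * math.sin(5 * a)


def idw_surrogate(
    samples: Sequence[Point], values: Sequence[float], power: float = 2.0
) -> Callable[[Point], float]:
    """Build an inverse-distance-weighting surrogate from simulated samples."""

    def predict(x: Point) -> float:
        num = 0.0
        den = 0.0
        for s, v in zip(samples, values):
            d = math.dist(x, s)
            if d == 0.0:
                return v  # exact at a design we already simulated
            w = 1.0 / d ** power  # closer samples get more influence
            num += w * v
            den += w
        return num / den

    return predict


random.seed(0)

# 1. Spend a small budget of expensive runs to collect performance data.
samples = [(random.random(), random.random()) for _ in range(20)]
values = [expensive_simulation(s) for s in samples]

# 2. Fit the cheap surrogate on that data.
surrogate = idw_surrogate(samples, values)

# 3. Screen many candidate designs with the surrogate instead of the solver.
candidates = [(random.random(), random.random()) for _ in range(5000)]
best = min(candidates, key=surrogate)

# 4. Verify only the most promising candidate with the expensive model.
print("surrogate prediction:", round(surrogate(best), 3))
print("simulation check:    ", round(expensive_simulation(best), 3))
```

An IDW prediction is always a weighted average of the sampled values, so it can never predict anything better than the best design it has already seen. Richer models such as Gaussian processes or neural networks are typically used in practice, and all of them are only as good as the performance data they are trained on.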

Altair helps its customers prepare for ML models with RapidMiner, its dedicated artificial intelligence (AI) and data analytics solution, to drive its DesignAI software.

UPDATE:

Altair published a clear and informative article, “A Human's Guide to Generative AI”, that is well worth reading.

So, when it comes time to over-engineer a part for your bike, or to produce a high-performance component you actually need, exploring text-to-mesh experiments for inspiration might be fun, much as some architects use Midjourney to produce renders of buildings that will never be built.

But when it comes time to create something functional, there's still work to be done in collecting, cleaning, tagging, training, and testing before you get your perfect crank.

Register to attend CDFAM Berlin to learn from and connect with people at the forefront of AI in engineering, from materials development, part optimization and process development, through to the design and optimization of architectural systems.

Experts from NAVASTO, MIT, 1000Kelvin, Altair, New Balance, Carbon, Cognitive Design Systems, Nano Dimension, Royal HaskoningDHV, Barnes Global Advisors and Oqton will all be discussing how they are currently integrating AI and machine learning into engineering workflows at every scale.

Updates: March 29

Since publishing, I found this presentation by Faez Ahmed, Assistant Professor, MIT Mechanical Engineering, on engineering design and AI.

I also found an article published today, From Automation to Augmentation: Redefining Engineering Design and Manufacturing in the Age of NextGen-AI, by Md Ferdous Alam, Austin Lentsch, Nomi Yu, Sylvia Barmack, Suhin Kim, Daron Acemoglu, John Hart, Simon Johnson, and Faez Ahmed at MIT.

I was also made aware of TRIPO3D, which produces things that ‘look’ closer to engineered geometry but are still not engineered geometry.

From the prompt "VESA bracket to mount 52 inch tv to a wall"