Interview with Josefine Lissner on Algorithmic Engineering

Rocket Science, DfAM and AI in Engineering

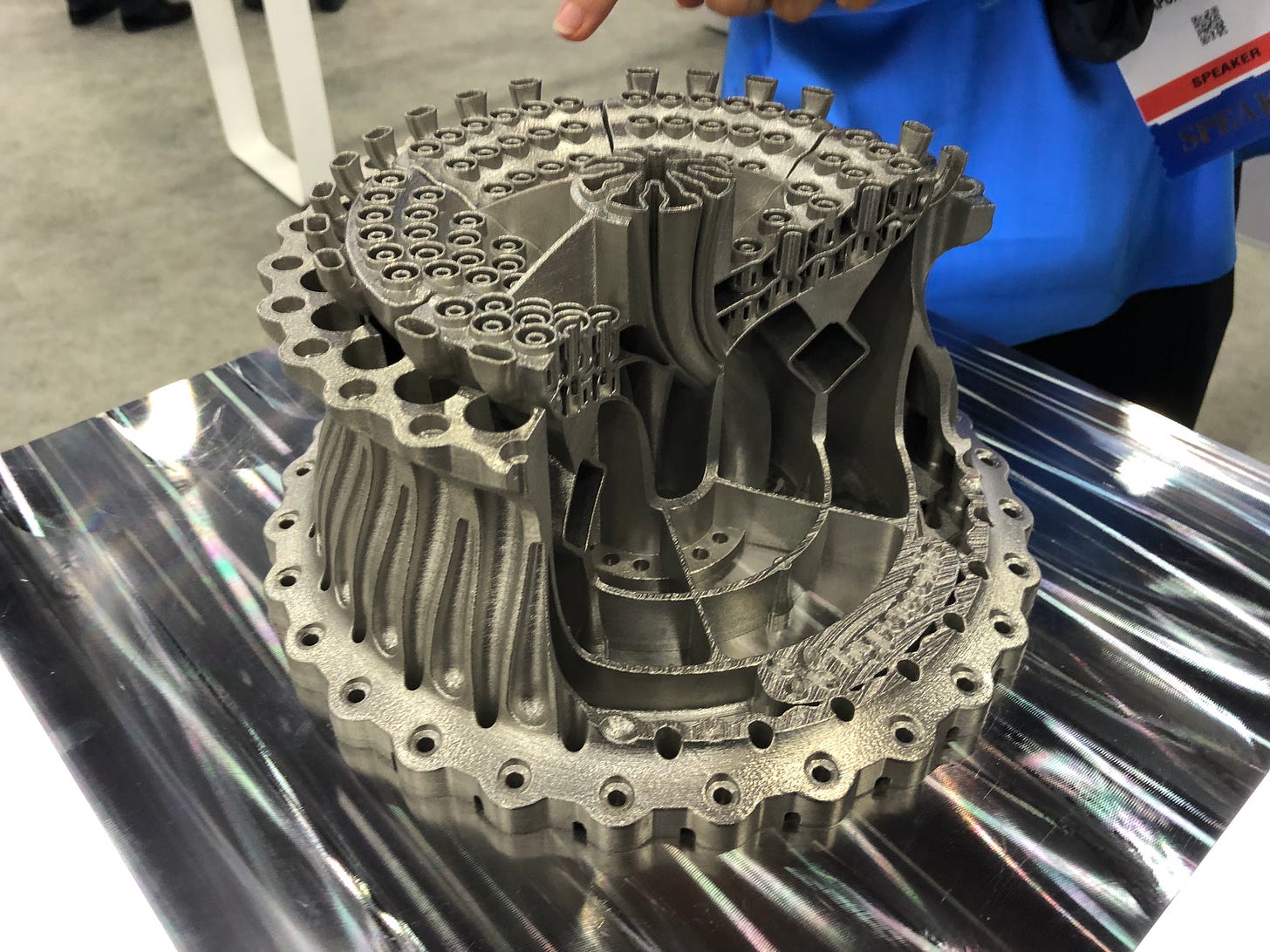

When I first spotted the Aerospike Rocket Engine showcased by Hyperganic and EOS, I was really curious about how they arrived at the geometry. Although I have seen a number of algorithmically driven designs, including rocket engine components, I had never seen anything that looked quite so alien.

Knowing it would be on display at the EOS booth at Rapid TCT 2022, I made sure to stop by and check out the design in person. I was lucky enough to speak to Josefine Lissner, who is responsible for the design, and learn about the process she used to define the requirements that guided the software in generating the rocket engine.

Q.

Can you tell us a little bit about yourself, your education and how you came to designing rocket engines at Hyperganic?

A.

I am an Aerospace Engineer by training. I originally chose this field of study because of the aerodynamics lectures, which I hoped would allow me to work in Formula One one day. That was my “dream” by the time I finished school. I eventually ended up working for the Mercedes AMG Petronas Formula One Team and the newly founded Porsche Motorsport Formula E Team.

Although I loved my work, I had the sense that as an engineer I should probably work on something more meaningful. I started to teach myself how to code, because I figured that it is a must-have skill for a good engineer of the 21st century. I quite enjoyed it. Then I had the chance to participate in an astronaut training session at ESA, and that got me completely hooked on spaceflight and rocketry.

I googled everything I could find on how rocket engines work and eventually came across Hyperganic’s iconic rocket picture in my Google News feed.

I applied to do my Master’s thesis at Hyperganic. Lin, our CEO and a space buff himself, and I quickly converged on the idea that I should try to design my own rocket engine using the Hyperganic Core technology…

Q.

Can you go into more detail about how Hyperganic software works, how you set up the conditions to define the performance requirements that then define the geometry?

A.

Hyperganic is a platform that gives engineers the tools to build their own applications and workflows, just as the Microsoft Windows operating system enables developers to build apps like Word, web browsers and all sorts of other things.

We do not dictate a given workflow or constrain users to one. We provide the tools to algorithmically generate geometry, optimisation algorithms, print preparation and, soon, in-house simulation capabilities. But it is very much up to the engineer to come up with a meaningful workflow.

We are not selling a black box where engineers load a file and push a button. If all companies use the same black-box tools, they have nothing to differentiate themselves from their competitors.

Depending on the application, there are different options.

We can automate straightforward mass-customization workflows: each time, a different biomedical scan goes in and the algorithm produces a medical device or consumer product that is uniquely tailored to fit the patient or customer. No iterations, optimizations or feedback loops happen here.
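
To make the "no iterations" point concrete, here is a minimal Python sketch of a single-pass mass-customization step. It assumes, purely for illustration, that a scan has already been reduced to a list of limb cross-section radii and that the device is a simple brace shell; `generate_brace_profile`, `clearance_mm` and `wall_mm` are hypothetical names, not part of Hyperganic Core.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class BraceProfile:
    inner_radii_mm: List[float]  # surface touching the patient (scan + clearance)
    outer_radii_mm: List[float]  # outer skin of the printed shell


def generate_brace_profile(
    scan_radii_mm: Sequence[float],
    clearance_mm: float = 1.0,
    wall_mm: float = 2.5,
) -> BraceProfile:
    """Map one (hypothetical) scan to one tailored device in a single pass.

    There is no loop over candidate designs: the same encoded rules are applied
    to every new scan, so each patient gets a unique but deterministic result.
    """
    if clearance_mm < 0 or wall_mm <= 0:
        raise ValueError("clearance must be >= 0 and wall thickness > 0")
    inner = [r + clearance_mm for r in scan_radii_mm]
    outer = [r + wall_mm for r in inner]
    return BraceProfile(inner, outer)


# Each new scan simply runs through the same rules once.
profile = generate_brace_profile([30.0, 32.0, 31.5])
print(profile.outer_radii_mm)
```

Because the function is a pure mapping from scan to geometry, it can run unattended for every order; any iteration would only be needed if the rules themselves were being tuned.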

For more complex objects with different objectives, you will have to iterate. If you have no clue about how design features trade off against each other, you are best off generating many variants, exploring a large solution space and testing these variants against a fitness function. Think of genetic optimization algorithms that mimic the process of evolution.
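
The "generate many variants and test them against a fitness function" approach can be sketched as a small genetic algorithm. This is a generic textbook GA in Python (tournament selection, arithmetic crossover, Gaussian mutation, elitism), not Hyperganic's optimiser; the two design variables and the toy fitness function are assumptions standing in for a real parametric model and its evaluation.

```python
import random
from typing import Callable, List, Sequence, Tuple

Genome = List[float]


def evolve(
    fitness: Callable[[Sequence[float]], float],
    bounds: Sequence[Tuple[float, float]],
    pop_size: int = 60,
    generations: int = 80,
    mutation_sigma: float = 0.2,
    seed: int = 0,
) -> Tuple[Genome, float]:
    rng = random.Random(seed)

    def clamp(genome: Genome) -> Genome:
        # Keep every design variable inside its allowed range.
        return [min(max(g, lo), hi) for g, (lo, hi) in zip(genome, bounds)]

    # Random initial population spread across the whole design space.
    population = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]

    for _ in range(generations):
        scored = [(fitness(g), g) for g in population]
        scored.sort(key=lambda s: s[0], reverse=True)

        def tournament() -> Genome:
            # Pick the fitter of two randomly chosen individuals.
            a, b = rng.sample(scored, 2)
            return a[1] if a[0] >= b[0] else b[1]

        next_gen = [scored[0][1], scored[1][1]]  # elitism: keep the two best
        while len(next_gen) < pop_size:
            p1, p2 = tournament(), tournament()
            w = rng.random()
            child = [w * x + (1 - w) * y for x, y in zip(p1, p2)]  # arithmetic crossover
            child = [g + rng.gauss(0.0, mutation_sigma) for g in child]  # mutation
            next_gen.append(clamp(child))
        population = next_gen

    best = max(population, key=fitness)
    return best, fitness(best)


def toy_fitness(design: Sequence[float]) -> float:
    # Hypothetical stand-in for an evaluated design; best at (3.0, -1.0).
    x, y = design
    return -((x - 3.0) ** 2 + (y + 1.0) ** 2)


best, score = evolve(toy_fitness, bounds=[(-5.0, 5.0), (-5.0, 5.0)])
print(best, score)
```

The engineer's knowledge lives in the fitness function and the parameter bounds; the loop itself knows nothing about the object being designed.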

If you want to include more engineering knowledge in the process, e.g. rules on how to react when certain things happen, you can build a feedback loop. For instance, you simulate a structural component and find that the walls are too thin in certain areas. You can then trigger a decision process in which those walls are locally thickened before going into the next cycle of iterations.
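
The wall-thickening feedback loop can be sketched as: simulate, find the regions that break a rule, apply the engineer's rule locally, repeat. In this minimal Python sketch, `simulate_stress` is a hypothetical placeholder (nominal stress = load / wall thickness per region) for a real structural simulation, and the 10% growth step and the allowable stress are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def simulate_stress(loads: Sequence[float], walls: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for an FEA run: nominal stress per region = load / wall thickness."""
    return [load / wall for load, wall in zip(loads, walls)]


def thicken_until_safe(
    loads: Sequence[float],
    walls: Sequence[float],
    allowable: float,
    growth: float = 1.1,
    max_iterations: int = 50,
) -> Tuple[List[float], int]:
    """Feedback loop: thicken only the overstressed regions, then re-simulate."""
    walls = list(walls)
    for iteration in range(max_iterations):
        stresses = simulate_stress(loads, walls)
        overloaded = [i for i, s in enumerate(stresses) if s > allowable]
        if not overloaded:
            return walls, iteration  # every region now satisfies the rule
        for i in overloaded:
            walls[i] *= growth  # engineering rule: local thickening
    raise RuntimeError("walls did not converge within max_iterations")


walls, cycles = thicken_until_safe(
    loads=[100.0, 400.0, 50.0], walls=[1.0, 1.0, 1.0], allowable=100.0
)
print(walls, cycles)
```

Only the overloaded middle region grows; the other regions keep their original thickness, which is the "local" part of the rule.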

So there are many ways of going about it. At its core, we are automating the manual drawing process and allowing engineers to encode their knowledge of how to design and solve problems.

Q.

Complex designs such as the aerospike rocket engine combine different performance requirements in a single unified object. What would previously have been a series of discrete components assembled together can now blend together, with the same material throughout the design space, thanks to the additive manufacturing process.

Onur Gun talks about this as the Part & Whole Theory: additive manufacturing allows us to rethink design as not just part consolidation but function consolidation. However, unexpected things can happen at the boundaries.

How do you prioritize which performance functions drive the geometry first, and how do you blend the components together so that no unintended consequences arise where the boundaries meet?

A.

How to prioritize conflicting requirements is still very much up to the engineer. The algorithms are basically an interpretation of the human’s thought process. The engineer also still needs to figure out what to optimize for, how to react to a simulation result and how to architect a feedback loop.

The interesting aspect about Algorithmic Engineering is that you detach yourself from visual building blocks and physical interfaces.

Traditionally two aspects with different functions might manifest themselves as two separate parts that need to be bolted together. So you would design for a physical interface like a flange.

With Hyperganic’s approach, you think in terms of logical interfaces, where two components exchange data in order to negotiate their final shape. Their different functional requirements do not prevent them from growing together and becoming one integral part in the end.
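
One way to picture a logical interface is with implicit geometry: each component is a signed distance function (negative inside material), one component publishes data that the other reads to position itself, and the two are merged with a smooth union instead of a flange. The 2D Python sketch below is an assumption for illustration only; the component classes, the `interface()` dictionary and the blend radius `k` are hypothetical, Hyperganic's own implementation is not shown, and the smooth minimum is the common quadratic polynomial variant.

```python
import math
from dataclasses import dataclass


@dataclass
class Chamber:
    """Hypothetical chamber cross-section: a ring (annulus) centred on the origin."""
    inner_radius: float
    wall: float

    def interface(self) -> dict:
        # Data this component publishes to its neighbours instead of a flange.
        return {"outer_radius": self.inner_radius + self.wall}

    def sdf(self, x: float, y: float) -> float:
        mid = self.inner_radius + self.wall / 2
        return abs(math.hypot(x, y) - mid) - self.wall / 2


@dataclass
class InletPipe:
    """Hypothetical inlet: a rectangle that positions itself from the chamber's interface data."""
    width: float
    length: float

    def sdf(self, interface: dict, x: float, y: float) -> float:
        start = interface["outer_radius"]  # negotiated attachment point
        cx = start + self.length / 2
        dx = abs(x - cx) - self.length / 2
        dy = abs(y) - self.width / 2
        outside = math.hypot(max(dx, 0.0), max(dy, 0.0))
        inside = min(max(dx, dy), 0.0)
        return outside + inside


def smooth_union(a: float, b: float, k: float) -> float:
    """Quadratic smooth minimum: like min(a, b) but adds material (a fillet) near the junction."""
    h = max(k - abs(a - b), 0.0) / k
    return min(a, b) - h * h * k * 0.25


chamber = Chamber(inner_radius=10.0, wall=2.0)
pipe = InletPipe(width=4.0, length=10.0)


def part_sdf(x: float, y: float, k: float = 2.0) -> float:
    # One integral part: both functional components blended into a single field.
    return smooth_union(chamber.sdf(x, y), pipe.sdf(chamber.interface(), x, y), k)
```

Because the inlet reads `outer_radius` from the chamber, changing the chamber wall moves the inlet with it, and the blend radius `k` controls how far the two grow together at the corner.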

So, we effectively model in terms of functional components rather than physical components. This also opens up the opportunity to spatially spread out functionality across multiple physical parts.

In this regard, we are again moving closer to what we see in nature: high levels of functional redundancy across many, many components that all blend together (think of a tree).

Q.

In our previous conversation, we discussed how a lot of marketing material claims that generative design is driven by AI, when really it is a set of computational and simulation rules, driven by physics and math equations, that approximate what happens in reality in order to create geometry that meets a set of constraints.

The lack of real world data is what makes it difficult to train an AI for the design of complex, high performance components so for now we rely on simulation.

We could train an AI on simulation algorithms/synthetic data, but again it would be an approximation that may be better at bypassing simulation than at actually creating geometry.

Where do you think we can first apply AI to design and what do you think the path is to true AI driven design?

A.

We need to build the data set first.

Image-based AIs like DALL-E produce such astonishing visual results because they have the entire internet full of labeled pictures to learn from. But just because we can artificially create images of realistic-looking spacecraft, it doesn't mean that they are functional spacecraft.

The step from “looks good” to “actually works” is huge! And just to be clear: We do not have a machine readable database of engineering knowledge… yet!

If we shift engineering to a software paradigm, we encode that knowledge into algorithms. So in a sense we are taking the first step and starting to build this database of “how things work”. Using it to train an AI to create functioning objects from the existing code modules will be the logical next step.

You might be familiar with GANs (Generative Adversarial Networks) for image-based AIs. One network synthesizes a picture of a dog and the other network classifies it. The two networks are trained against each other until even a human is convinced that the picture shows a dog. I can see a similar setup working for geometry generation.

We can train a classifier AI by running it in parallel with every physical simulation that we do on the Hyperganic platform. At some point, the AI can be used to discard vast amounts of the solution space (classification) and save us a lot of simulation hours. Eventually, it could predict the entire simulation result. On the other side, we have a synthesizing AI that operates on existing code modules to create new engineering objects until the classifier says “this object is working”, “this plane can fly”.
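
The classifier half of this idea can be sketched as a surrogate model that logs every simulation result and is later used to screen candidates before they are simulated. This minimal Python sketch uses a plain k-nearest-neighbour classifier as a stand-in for whatever model would actually be trained, and `expensive_simulation` is a hypothetical pass/fail rule over two normalised design parameters; the synthesizing side is not shown.

```python
import math
import random
from typing import List, Sequence, Tuple

Design = Tuple[float, float]


def expensive_simulation(design: Design) -> bool:
    """Hypothetical physics check: the design 'works' when x + y < 1."""
    x, y = design
    return x + y < 1.0


class SurrogateClassifier:
    """k-nearest-neighbour classifier trained on logged (design, works) pairs."""

    def __init__(self, k: int = 5):
        self.k = k
        self.samples: List[Tuple[Design, bool]] = []

    def record(self, design: Design, works: bool) -> None:
        # Called alongside every real simulation, so the data set grows for free.
        self.samples.append((design, works))

    def predict(self, design: Design) -> bool:
        nearest = sorted(self.samples, key=lambda s: math.dist(s[0], design))[: self.k]
        votes = sum(1 for _, works in nearest if works)
        return 2 * votes > len(nearest)  # majority vote


def screen(classifier: SurrogateClassifier, candidates: Sequence[Design]) -> List[Design]:
    """Discard candidates predicted to fail, so only promising ones are simulated."""
    return [c for c in candidates if classifier.predict(c)]


rng = random.Random(42)
surrogate = SurrogateClassifier(k=5)
for _ in range(300):  # phase 1: run simulations and log every result
    d = (rng.random(), rng.random())
    surrogate.record(d, expensive_simulation(d))

candidates = [(rng.random(), rng.random()) for _ in range(200)]
shortlist = screen(surrogate, candidates)  # phase 2: simulate only these
print(f"simulating {len(shortlist)} of {len(candidates)} candidates")
```

The saving comes from the shortlist: roughly half of the candidates in this toy setup never reach the expensive simulation.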

It’s hard to say when this will become reality. We are taking the first steps. I would say that, before expecting the AI to somehow develop a machine understanding, it is easier to train it to become a “user” of the algorithms that humans have encoded. We will see!

Q.

Your role at Hyperganic has you working on design systems ranging from rocket nozzles to biomedical devices. How do the code modules from one application inform the other, and what fundamental design principles are inherent in all applications?

A.

When you use algorithms to solve a certain problem, you initially work on a more abstract level. For example, when I wanted to route cooling channels around the rocket’s combustion chambers, I developed an algorithm that generally solves the problem of building tube-like structures across the surface of some other base shape. Once I have defined the generic rules for solving that, I can tailor the algorithm to my specific problem. The tube-like structures are then concretely replaced by rectangular, functionally driven cooling geometries, and the base shape concretely becomes the combustion chamber. The benefit lies in the level of abstraction.

The more abstractly I can solve a problem the more I can deploy that solution principle to other engineering domains.

In another project, I designed an artificial lung bubble (a so-called alveolus). I used the same routing algorithm to design the capillary system. Instead of rectangular cooling channels, I used round tubes that later become the soft capillary wall tissue. Instead of the combustion chamber, the base shape was represented by the air sacs of the alveolus. For the Aerospike engine, the routing was very functionally driven, e.g. how the swirl angle, channel height and channel width behave over the chamber length. For the biological application, I added artificial randomness in order to mimic organic-looking networks.
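
A generic "tubes across a base shape" router of the kind described here could look like the Python sketch below. It is an assumption-laden illustration, not Hyperganic's algorithm: the base shape is restricted to a surface of revolution given by a radius function `r(z)`, channels are returned as centreline polylines only (no cross-sections), the swirl update ignores the surface's slope, and `swirl_deg` and `jitter` are hypothetical parameters standing in for the functional (rocket) and organic (alveolus) variants.

```python
import math
import random
from typing import Callable, List, Optional, Tuple

Point = Tuple[float, float, float]


def route_channels(
    base_radius: Callable[[float], float],
    z_start: float,
    z_end: float,
    n_channels: int,
    swirl_deg: Callable[[float], float],
    steps: int = 100,
    jitter: float = 0.0,
    rng: Optional[random.Random] = None,
) -> List[List[Point]]:
    """Route evenly spaced channel centrelines over a surface of revolution r(z)."""
    rng = rng or random.Random(0)
    dz = (z_end - z_start) / steps
    paths = []
    for c in range(n_channels):
        theta = 2 * math.pi * c / n_channels  # evenly spaced start angles
        path = []
        for i in range(steps + 1):
            z = z_start + i * dz
            r = base_radius(z)
            path.append((r * math.cos(theta), r * math.sin(theta), z))
            # Advance the angle so the path keeps the local swirl angle to the axis.
            theta += dz * math.tan(math.radians(swirl_deg(z))) / max(r, 1e-9)
            if jitter:
                theta += rng.gauss(0.0, jitter)  # organic variation
        paths.append(path)
    return paths


# Rocket-like case: a contour that narrows downstream, swirl varying along the length.
cooling = route_channels(
    base_radius=lambda z: 40.0 - 0.15 * max(z - 60.0, 0.0),
    z_start=0.0, z_end=120.0, n_channels=24,
    swirl_deg=lambda z: 30.0 - 0.1 * z,
)

# Alveolus-like case: the same router on a sphere, with randomness for an organic look.
R = 0.1
capillaries = route_channels(
    base_radius=lambda z: math.sqrt(max(R * R - z * z, 0.0)),
    z_start=-0.9 * R, z_end=0.9 * R, n_channels=12,
    swirl_deg=lambda z: 0.0, jitter=0.05, rng=random.Random(7),
)
```

Only the inputs change between the two applications; the routing rules themselves are shared, which is the abstraction benefit described above.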

This kind of cross-pollination is increasingly apparent and growing as we build our library of existing algorithms. Algorithmic Engineering allows for multidisciplinarity, and I see that as a key pillar of innovation. Personally, I have always seen myself as a generalist rather than a specialist, so I find a lot of joy in working on such vastly diverse topics. Rocket engineers don’t usually talk to biologists very often, but for me these two applications have a great deal in common.

Q.

How do you validate and/or compare the designs generated by the software?

A.

Depending on the use case or industry, we have two criteria of interest. On the one hand, we compare the entire workflow and evaluate how much time we can save by automating manual design steps through Algorithmic Engineering and using AM fabrication. Regardless of how complex the solution is, if we can shorten the iteration cycles, products can enter the market sooner or be refined to a new level within the same amount of time. This is our focus for most consumer products and mass-customization workflows.

On the other hand, we want to actively push innovation.

To put it boldly: if our geometries are doable in, or comparable to, manual CAD, we have already lost.

Ideally, we come up with designs and technical solutions that cannot be generated with existing tools. If that’s the case, we have truly innovated and all comparisons against CAD drawings become obsolete.

Q.

What advice do you give to people approaching algorithmic, requirement-driven design versus CAD-based modeling?

A.

Learning to code involves a learning curve, but it is totally worth it.

Our CEO always says it’s like going from a mechanical calculator to Microsoft Excel. You are so much more productive. I was wasting my time when I did CAD modeling of the same airfoils over and over again during my time in Formula One.

Now I am supercharged by everything that I have done in the past. Once I have an algorithmic model of such an airfoil, I will never build one again. I can recycle and extend my existing model, which leaves me more time for new stuff.
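
As an example of an airfoil that only has to be modelled once, here is a Python sketch of the standard NACA 4-digit airfoil equations, using cosine spacing and the open-trailing-edge thickness coefficient. NACA 4-digit sections are our choice for illustration; the interview does not say which airfoil families were used in Formula One, and `naca4` is a hypothetical helper name.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def naca4(code: str, n: int = 50, chord: float = 1.0) -> Tuple[List[Point], List[Point]]:
    """Upper and lower surface points of a NACA 4-digit airfoil, leading edge to trailing edge."""
    if len(code) != 4 or not code.isdigit():
        raise ValueError("expected a 4-digit NACA code such as '2412'")
    m = int(code[0]) / 100.0  # maximum camber (fraction of chord)
    p = int(code[1]) / 10.0   # position of maximum camber
    t = int(code[2:]) / 100.0  # maximum thickness
    if m > 0 and p == 0:
        raise ValueError("a cambered section needs a non-zero camber position")

    upper, lower = [], []
    for i in range(n + 1):
        # Cosine spacing clusters points near the leading and trailing edges.
        x = 0.5 * (1 - math.cos(math.pi * i / n))
        yt = 5 * t * (0.2969 * math.sqrt(x) - 0.1260 * x - 0.3516 * x**2
                      + 0.2843 * x**3 - 0.1015 * x**4)
        if m == 0:
            yc, dyc = 0.0, 0.0  # symmetric section: flat mean line
        elif x < p:
            yc = m / p**2 * (2 * p * x - x**2)
            dyc = 2 * m / p**2 * (p - x)
        else:
            yc = m / (1 - p) ** 2 * ((1 - 2 * p) + 2 * p * x - x**2)
            dyc = 2 * m / (1 - p) ** 2 * (p - x)
        theta = math.atan(dyc)
        # Apply the thickness perpendicular to the mean camber line.
        upper.append((chord * (x - yt * math.sin(theta)), chord * (yc + yt * math.cos(theta))))
        lower.append((chord * (x + yt * math.sin(theta)), chord * (yc - yt * math.cos(theta))))
    return upper, lower


upper, lower = naca4("0012")
```

Once written, a new section is one function call (for example `naca4("2412", chord=2.0)`), which is the "never build one again" effect in miniature.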

My hope is that becoming an Algorithmic Engineer will be “fashionable” for young engineers who want to do things differently and smarter than what they learn at university. In my romantic opinion, engineers are the inventors of the physical world around us. Yet I see a tendency amongst engineers to stick to the tools they have gotten used to.

We need to showcase what computational methods can enable in terms of novel technical solutions and create the desire to overcome this initial hurdle of learning how to code. Personally, I don’t understand this discrepancy, because engineers are already logical people. They execute a step-by-step algorithm in their heads when they manually create a CAD drawing. We should stop telling people that coding is hard! It’s not.

Q.

Thank you so much for your time. Is there anything you would like to close with?

A.

I encourage every young engineer who wants to stay relevant or, even better, actively shape an exciting future and drive innovation to acknowledge the limitations of existing CAD workflows and become an Algorithmic Engineer!

If you would like access to Hyperganic Core to explore how algorithmic engineering could help solve your design innovation problems, get in touch with Hyperganic.

To support articles such as this, consider becoming a paid (or free) subscriber and reach out if you are interested in contributing an article for the DfAM Substack.
