Learning to Generate Shapes with AI at Autodesk

CDFAM Speaker Series Interview with Karl D.D. Willis

Continuing our interview series exploring artificial intelligence and engineering, we spotlight the cutting-edge work of Karl D.D. Willis and his team at the Autodesk Research AI Lab. They're expanding the horizons of 3D generative AI research, focusing not only on creating 3D models but also on assemblies of mechanical systems.

Their work goes beyond visualizing shapes to address the functionality and modeling of components. Willis shares insights from his upcoming presentation at CDFAM, covering everything from parametric CAD generation and the use of simulation to improve results to the idea of a 'Clippy' for engineering.

Today (May 15th) is the last day to register for discounted tickets to attend CDFAM.

Could you start by describing your current role as a Senior Research Manager at Autodesk and sharing some details about the projects you are currently working on?

I work in the Autodesk Research AI Lab. We develop learning-based approaches to problems across the design-and-make process, be it in manufacturing, architecture, or construction.

Can you share an overview of the topics you plan to cover in your upcoming presentation at CDFAM, titled ‘Learning to Generate Shapes’, and explain how it relates to these projects?

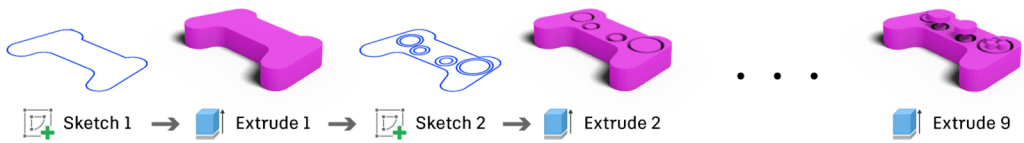

There has been amazing progress with text and image generation using machine learning. We have also seen some great initial work with 3D shape generation. However, one area that remains in its infancy, and will be the focus of my talk, is the generation of editable shapes that a designer can directly manipulate. One example is generating and editing vector graphics such as SVG (see how ChatGPT can attempt this). More specific to manufacturing, we might want to generate parametric CAD models complete with modeling history and constraints.

As you and your team have been exploring machine learning at the Autodesk Research AI Lab for a number of years, how has the recent surge of interest and development in large language models like ChatGPT, as well as early text-to-2D-to-3D generative AI examples such as Point-E, influenced your research?

One of the great things about having research happen out in the open is that we can very quickly stand on the shoulders of giants to advance what is possible with ML.

Models like CLIP have had a huge impact on the field, and the Autodesk AI Lab has been quick to leverage them; for example, we published the first text-to-3D work in the field.

Sanghi, Aditya, et al. CLIP-Forge: Towards zero-shot text-to-shape generation. CVPR 2022.

Using AI to assist with repetitive CAD modeling tasks, much like autocomplete for text, or a Clippy that guides a designer through the interface instead of leaving them to dig through menus, has the potential to save time and ease the learning curve of new modeling processes. Can you explain how you and your team are exploring and thinking about exposing this functionality?

I think autocomplete is a natural application for design for a number of reasons. First, the designer doesn’t need to do anything differently: the ML model does the work of interpreting their design and predicting what might come next. Second, the designer can opt in or out of the recommendations provided.

Finally, autocomplete aligns well with how most language models are trained: to predict the next word in a sentence.
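To make the analogy with next-word prediction concrete, here is a minimal sketch that treats a modeling history as a sequence of operation "tokens" and suggests the next operation from simple bigram counts. This is a deliberately simple stand-in, not the approach used in the Lab's research; the operation names, `HISTORIES`, `train_bigrams`, and `suggest_next` are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical modeling histories: each design is a sequence of operation names.
# These tokens are illustrative only, not the Fusion 360 Gallery data format.
HISTORIES = [
    ["sketch", "extrude", "sketch", "extrude", "fillet"],
    ["sketch", "extrude", "fillet", "chamfer"],
    ["sketch", "revolve", "fillet"],
    ["sketch", "extrude", "sketch", "extrude", "chamfer"],
]


def train_bigrams(histories):
    """Count how often each operation follows another (next-token statistics)."""
    counts = defaultdict(Counter)
    for seq in histories:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def suggest_next(counts, history, k=2):
    """Return up to k likely next operations given the designer's last operation.

    The designer stays in control: these are suggestions to accept or ignore.
    """
    if not history or history[-1] not in counts:
        return []  # nothing observed after this operation, so no suggestion
    return [op for op, _ in counts[history[-1]].most_common(k)]


model = train_bigrams(HISTORIES)
print(suggest_next(model, ["sketch"]))  # ['extrude', 'revolve']
```

A real system would condition on far more than the last operation (the geometry, the sketch profiles, the full history), but the interaction pattern is the same: observe what the designer has done, predict what comes next, and let them opt in.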

Willis, Karl D.D., et al. Fusion 360 Gallery: A dataset and environment for programmatic CAD construction from human design sequences. SIGGRAPH 2021.

How can we move beyond AI assisting what happens inside the software, such as 3D modeling, to AI helping with design intent and manufacturing processes that occur outside of the software?

What we can work on is determined by the availability of data. The type of data we might need to understand design intent is very specialized.

I think of it as understanding the different levels of a design. For example, a trimmed cylindrical surface, made with the fillet feature, forms a rounded internal corner, which exists to avoid stress concentrations and reduce machining cost. Obtaining data at this granularity for all aspects of the geometry is challenging, so how to tackle this area is an open question right now.
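The levels in the fillet example can be pictured as one annotation record per face, running from raw geometry up to design intent. The sketch below is illustrative only: `FaceAnnotation`, its field names, and the helpers `describe` and `missing_levels` are hypothetical and do not reflect any real dataset schema. The `missing_levels` helper hints at the data problem described above, since the intent level is usually the one left empty.

```python
from dataclasses import dataclass, field


@dataclass
class FaceAnnotation:
    """One face described at several levels, from geometry up to intent.

    Hypothetical schema for illustration; no real dataset format is implied.
    """
    geometry: str = ""        # what the surface is, e.g. a trimmed cylinder
    feature: str = ""         # the CAD feature that created it, e.g. fillet
    design_element: str = ""  # what it forms in the part, e.g. an internal corner
    intents: list[str] = field(default_factory=list)  # why it exists


def describe(a: FaceAnnotation) -> str:
    """Render the annotation as a single sentence, lowest level first."""
    reasons = " and ".join(a.intents) if a.intents else "unknown ends"
    return (f"{a.geometry}, made with the {a.feature} feature, "
            f"forming {a.design_element}, to {reasons}")


def missing_levels(a: FaceAnnotation) -> list[str]:
    """List the levels that have no annotation yet."""
    levels = {"geometry": a.geometry, "feature": a.feature,
              "design_element": a.design_element, "intents": a.intents}
    return [name for name, value in levels.items() if not value]


fillet_face = FaceAnnotation(
    geometry="a trimmed cylinder surface",
    feature="fillet",
    design_element="a rounded internal corner",
    intents=["avoid stress concentrations", "reduce machining cost"],
)
print(describe(fillet_face))
```

Geometry and feature could plausibly be read from a CAD file's modeling history, while the design element and intent levels would need human labels, which is where the specialized data becomes hard to obtain.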